Unoccupied Aerial Vehicle (UAV) Deployment and Data Guidance

Purposes, definitions and guidance

Version 1.0 - 22 November 2022

Introduction

This document provides guidance on UAV - unoccupied aerial vehicle - deployment and data products for a variety of Peatland ACTION Programme monitoring purposes, most of which could apply to other NatureScot projects.

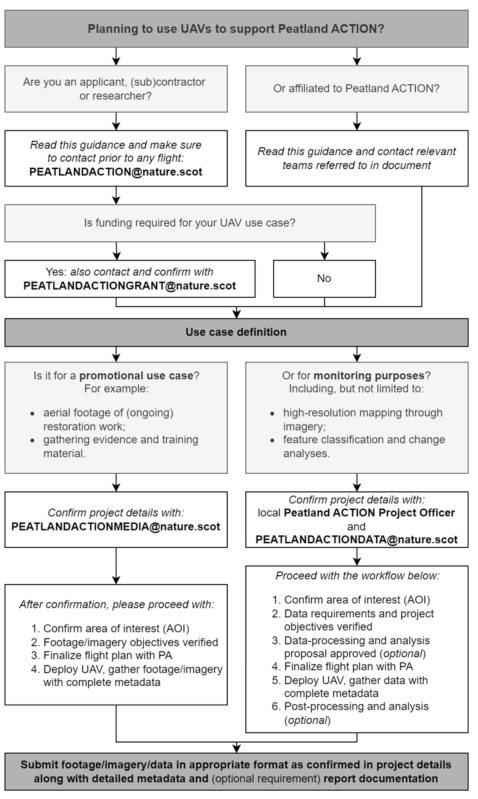

Two main applications of UAVs are covered in this document: promotional and monitoring purposes. For each purpose – and variations within these applications – information and examples are provided as guidance for field deployment and subsequent data processing/storage. The main focus of this document is to outline the peatland-related use case. It is written mainly for internal use across the Peatland ACTION Programme and for (sub-)contractors, collaborators (such as researchers and industry) and other organisations or persons that want to use UAVs for their data collection. Figure 1 illustrates the workflow.

Included are examples of UAV platforms and sensors, and software for planning and processing. Guidance on promotional use and monitoring with UAVs is provided including guidelines on data quality and metadata requirements. Additionally, a glossary is provided as well as a list of useful references.

For further information about this guidance please email [email protected]

1. Planning to use UAVs to support Peatland ACTION?

- Are you an applicant, (sub)contractor or researcher?

  - Read this guidance and make sure to contact prior to any flight: [email protected]

  - Is funding required for your UAV use case?

    - Yes: also contact and confirm with [email protected]

    - No

- Or affiliated to Peatland ACTION?

  - Read this guidance and contact relevant teams referred to in document

2. Use case definition

- Is it for promotional purposes? For example: aerial footage of (ongoing) restoration work; gathering evidence and training material.

  - Confirm project details with: [email protected]

  - After confirmation, please proceed with:

    1. Confirm area of interest (AOI)

    2. Footage/imagery objectives verified

    3. Finalise flight plan with PA

    4. Deploy UAV, gather footage/imagery with complete metadata

- Or for monitoring purposes? Including, but not limited to: high-resolution mapping through imagery; feature classification and change analyses.

  - Confirm project details with: local Peatland ACTION Project Officer and [email protected]

  - Proceed with the workflow below:

    1. Confirm area of interest (AOI)

    2. Data requirements and project objectives verified

    3. Data-processing and analysis proposal approved (optional)

    4. Finalise flight plan with PA

    5. Deploy UAV, gather data with complete metadata

    6. Post-processing and analysis (optional)

3. Submit footage/imagery/data in appropriate format as confirmed in project details along with detailed metadata and (optional requirement) report documentation.

Overview

Currently, UAVs – or drones – are used to obtain aerial perspectives of peatland restoration sites without any specific guidance on minimal data quality requirements, licensing issues and potential research purposes within Peatland ACTION. Depending on the final use of the obtained data, there are a number of things that can be considered:

- What is the project goal? For example with regard to pre- and post-restoration sites:

- Vegetation or habitat inventory

- Ecological status or health indication

- Obtaining promotional material

- Which platform is available? Can this limit a particular application:

- Standard RGB (red-green-blue) camera

- High-end multi- or hyperspectral camera

- Thermal or LiDAR sensors (not further discussed in this guidance, as they require specialised workflows)

A variety of projects involving UAVs within NatureScot and across our partners have been successful and/or have addressed limitations and advantages of different applications. Past projects, reports and handbooks include, but are not limited to:

- Scottish Invasive Species Initiative: detecting invasive non-native species along rivers and watercourses in northern Scotland;

- NatureScot Research Report 1286: seagrass restoration in Scotland – handbook and guidance;

- Conserving Bogs – The Management Handbook 2nd Edition: a cookbook of methods and techniques to help people effectively manage and conserve bogs.

Commercial Drone Operations

Before any commercial drone operation (e.g. contracted surveys and promotional imagery acquisition across the Peatland ACTION Programme), every pilot must hold the appropriate licence and, depending on the type of UAV, insurance. This guidance does not cover how to obtain a licence or insurance. You should follow the information provided by the Civil Aviation Authority and the Drone Code.

Note: You will need to have permission from the landowner to fly over their land. Be responsible and check with (local) authorities and NatureScot to ensure it is safe to fly your UAV and to conduct UAV surveys where and when you want. Flights in protected areas or near protected species, close to livestock and during the bird breeding season can be restricted.

Peatland ACTION Programme Plan integration

UAVs can be used for promotional and monitoring purposes linked to the Peatland ACTION Monitoring Strategy and Communications Plan.

Note: UAVs are not a mandatory or prioritised tool to be used at any stage of the Peatland ACTION funding application process. This guidance merely provides insight into the minimal requirements if the applicant, contractor or surveyor decides to acquire UAV-data.

Monitoring Strategy

Any UAV flight undertaken as part of a Peatland ACTION funded or supported project should aim to contribute to one of the three identified objectives in the Peatland ACTION Monitoring Strategy. This Strategy provides guidance for anyone wanting to contribute to the overall aim: to assess and improve the effectiveness of Peatland ACTION’s peatland restoration.

The strategy has two types of monitoring associated with peatland restoration:

Activity monitoring (what are we doing?) – measures indicators that reflect the implementation of peatland restoration activities.

Outcome monitoring (is it working?) – measures indicators that represent the objectives of peatland restoration; the effectiveness of the implemented restoration activities.

Communications Plan

UAVs are a powerful tool to get a bird’s eye view of a site and can help provide meaningful context to peatland restoration management decisions. Both images and video footage can be used on a wide variety of (social) media platforms. The benefit of using UAVs is that off-the-shelf UAVs are often sufficiently equipped to obtain good material. Training for these platforms is minimal, yet some experience will increase the quality of the footage and images.

Similar to the monitoring purposes, decisions on time of year, feature exposure and site conditions will have to be made in order to contribute appropriate promotional material.

UAV flights for promoting the Peatland ACTION Programme must align with our Communications Plan priorities. The integration will help determine the value of the proposed footage acquisition and help the user to prioritise outputs. In summary, there are two main priorities for any promotional UAV flight:

Priorities

- To encourage potential applicants to apply for funding and provide support to existing funding beneficiaries;

- To promote the benefits of peatland restoration to our key audiences.

- Prioritise the production of promotional material that communicates the benefits of peatland restoration to our target audiences, including through the use of Case Studies;

- Research and write material to promote the project through traditional and social media outlets;

- Lead and provide support in running events, open days, and awareness raising activities to encourage applications for funding, and to raise understanding of the value of our peatland resource as part of the broader climate change agenda;

- Contribute to reports on the performance of the project against the Communications plan objectives;

- Support communications by developing the necessary partnership approach with landowners, land managers, NatureScot staff and other Peatland ACTION Project Officers.

More detail about these actions can be found in the Communications Plan.

UAV platforms and sensors

There is no specific guidance on what UAVs must be used for monitoring or promotional surveys and the choice often depends on the use case. Listed below are examples of platforms and sensors commonly used for these kinds of applications.

UAVs

There are two main types of UAVs: rotorcraft and fixed-wing platforms (Table 1). For both types, a wide range of UAVs are available (including legacy models that are still in use, e.g. older DJI Phantom models), ranging from ‘consumer’-grade types to high-end machines, which can all be fitted with various camera systems and other sensors. The preferred platform and sensor will depend on the requirements of each individual monitoring purpose.

Note: for some use cases additional equipment, such as a highly accurate GNSS/GPS receiver, is required on board the UAV and/or separately to capture ground control points (GCPs). These solutions come at a cost, but can significantly improve the data precision required for some data products.

| | Rotorcraft | Fixed-wing |
|---|---|---|
| Description | Rotorcraft generally include multi-rotor UAVs (although single-rotor platforms do exist) ranging between 4 and 8 rotors that allow the aircraft to hover for precise deployment. | Fixed-wing UAVs are modelled after conventional planes and can be used to cover greater distances due to their efficient design and propulsion system, making them ideal for monitoring large areas. |
| Advantages | Relatively easy to launch (Vertical Take Off and Landing, VTOL), manoeuvrability and 3-axis camera targeting (with exceptions), hovering capability. | Long range and flight times, quiet operation, multiple sensor options, faster data collection for orthomosaics. |
| Limitations | Shorter flight times (around 30 minutes), requires greater piloting skills in manual mode (compared to fixed-wing). | Horizontal take-off (with exceptions), generally more expensive, not convenient for promotional purposes, limited payload options. |
| Example platforms | DJI Phantom 3, 4 and 4 RTK, Yuneec H520, Leica Aibot SX, Swellpro Splashdrone 3 or 4, DJI Inspire, DJI Mavic | senseFly eBee (Plus), Trimble UX5, QuestUAV DATAhawk (PPK), Mavinci Sirius Pro, Wingtra WingtraOne |

Cameras

Most off-the-shelf UAVs have an integrated optical camera system. However, scientific developments and commercial applications have driven the manufacturers and UAV communities to start to include alternative cameras and sensors to improve data collection options from these platforms (Table 2). For promotional purposes, a ‘standard’ high-resolution camera often meets the requirements for adequate data collection. But depending on the monitoring purposes, bespoke multiband camera sensor alternatives can be used to capture a wider range of spectral information from a scene (i.e. reflectance in the near-infrared (NIR), multi- or hyperspectral range).

Note: please check sensor compatibility with UAV platforms and refer to the manufacturer’s guidance for additional information on, for example, camera shutter type and speed, lens focal length, dimensions and weight, spectral band ranges, image/video resolutions and storage.

| Model | Effective pixels | Bands* | Integration |
|---|---|---|---|
| DJI Phantom 4 / Pro | 12 / 20 MP | RGB | UAV-integrated |
| DJI Phantom 4 Multispectral | 2 MP | RGB+NIR+RE | UAV-integrated |
| DJI Zenmuse | 24 MP | RGB | Fixed DJI mount |
| MAPIR Survey3 | 12 MP | Options: RGB, OCN, RGN, NGB, RE, NIR | Various mount options |
| MicaSense RedEdge-MX | 1.2 MP | RGB+NIR+RE | Various mount options |
| Parrot Sequoia+ | 1.2 MP | RGN+RE | Various mount options |
| senseFly S.O.D.A. | 20 MP | RGB | Fixed eBee mount |
| Sentera 6X | 3.2 or 20 MP | RGB+NIR+RE (20 MP RGB) | Various mount options |
| YuSense MS600 Pro | 1.2 MP | RGB+NIR+RE | Fixed DJI mount |

* RGB = red, green, blue; NIR = near-infrared; OCN = orange, cyan, NIR; RE = red-edge; RGN = red, green, NIR

Apart from swapping complete cameras, other modifications can be made as well, such as changing lenses or sensor sizes, which can alter resolution and image quality, or removing a filter on a camera to include NIR wavelengths. This often involves modifying the original camera or auxiliary add-on sensors on a UAV platform and should be done with professional guidance.

Other sensors

Apart from optical cameras, UAVs can also be fitted with other payloads to cover specialised jobs within the project, including hyperspectral or thermal sensors, as well as LiDAR (Table 3). For promotional purposes, these sensors are unnecessary, but for monitoring they can be useful for obtaining proxies for plant health, water quality or soil condition, for example.

| Type | Model | Data type | Description | Integration |
|---|---|---|---|---|
| Hyperspectral sensors | Haip Blackbird | Spectral signature | Miniature hyperspectral camera capable of obtaining spectral reflectance across 250 bands between 500-1,000 nm | Fixed DJI mount |
| Hyperspectral sensors | Headwall Hyperspec SWIR | Spectral signature | Hyperspectral camera for obtaining spectral signatures between 900-2,500 nm | Various mount options |
| LiDAR | Routescene UAV LidarPod | Point cloud | Obtain LiDAR point cloud data between 0-100 m elevation | Various mount options |
| Thermal sensors | MicaSense Altum-PT | Imagery | Capture pixel-aligned data across multispectral, panchromatic and thermal (8,000-14,000 nm) ranges | Various mount options |

These specific non-optical sensors also often require tailored data processing and interpretation workflows, which are not covered in this guidance. Users are referred to the manufacturer’s guidance, GitHub tutorials or the scientific community (literature or ResearchGate, for example).

Software

Along with the hardware, software is required in order to fly the UAV across the area of interest (AOI). A number of software options are also available for post-flight data processing and some options – currently supporting a wide range of UAV platforms and sensors – are included below.

Flight planning and image processing

Agisoft Metashape – software product capable of performing photogrammetric processing of images and generating 3D spatial data.

DJI GS Pro – flight (data) management app for DJI platforms. More options compared to the equally handy (free) DJI Go application, as it allows for planning waypoints and 3D mapping routes.

Drone2Map – fully integrated ArcGIS Pro software product by ESRI able to post-process data obtained using the Site Scan Flight app or any third-party application.

DroneDeploy – software bundle of flight and data processing solutions, supporting a wide range of rotorcraft and fixed-wing UAVs. Various pricing schemes, yet a wide range of options, including for post-flight data processing.

Maps Made Easy – online-based UAV-image processing environment with various subscription tiers, however free processing is possible for small AOIs. Various data formats are available for download, such as an orthomosaic and digital surface models. Works with Map Pilot Pro, an alternative UAV flight planning app with more capabilities (e.g., RAW image data collection) at increasing pricing rates.

OpenDroneMap – open-source toolkit to process UAV-imagery. Freely available through GitHub, supports a wide variety of data input formats and includes many output options for post-processing steps.

Pix4D – software package (i.e., Pix4Dmapper, Pix4Dcapture) with photogrammetry capabilities, including subsequent analysis, storage and sharing of the data.

Note: This is not a full list of recommended or accepted software packages, and users are free to trial and utilise different, free or paid for, apps as discussed and agreed on in conversations with Peatland ACTION and/or NatureScot.

GIS and data analysis

Specialised software is required to view and analyse the UAV-derived imagery and other data products when UAVs are used for monitoring purposes. Commonly used GIS packages, such as the free, open-source QGIS platform or ESRI’s proprietary ArcGIS (Pro) software, support any of the data processing steps covered in this guidance.

With regard to image analyses, such as classification, modelling or feature extraction, additional software packages, plugins or solutions are available through Python or R for example. Also other proprietary software solutions with comparable capabilities are available, including but not limited to, eCognition or MATLAB.

Promotional purposes

For most promotional purposes, the required data output includes either a set of aerial, oblique images, or a video captured during the UAV flight. Generally, this kind of data is relatively straightforward to obtain but does require flight planning software and field preparations.

Images

Generally, obtaining imagery for promotional use is the most straightforward application of a UAV camera. There is a wide variety of image types that can be captured and no single guidance will be able to cover all techniques and settings. For example, a vertical image can be obtained with the camera pointing straight down, or the camera can be tilted, resulting in a high oblique (with a visible horizon) or low oblique image. In most cases, oblique footage (both imagery and video) can be very valuable to showcase an area, as it highlights topographic detail: e.g. exploring gullies in an eroded peatland, showing ongoing tree-felling or slope-reprofiling, or emphasising the vastness of a landscape. When in doubt about what effect is most suitable for a particular scene, contact the end user or the Peatland ACTION Communications Team. Furthermore, these (individual) images can potentially be used beyond promotional use, for example for change analysis, provided the exact same scene is captured in subsequent flights.

Video

Similar to promotional imagery, UAV-derived video can be obtained using a variety of flight strategies or camera configurations. Most applications involve using a ‘standard’ UAV-fitted optical camera to capture high-definition video (HD), ranging from 1080p (Full HD) to 4K and beyond. Simple flight paths and filming angles (achieved with rotorcraft UAVs) are advised, with additional lowered gimbal speeds (to improve scene transition quality) and optional camera filters to reduce glare, e.g. a neutral density filter.

Overall, footage at 25 FPS (with 1/50s shutter speed) in .mov format is preferred. However, these details can be discussed with the applicant, land owners/users, and the Peatland ACTION Communications Team. Furthermore, a general outline – or storyboard – of ‘shots’ should be agreed prior to footage collection.

Monitoring purposes

In line with the objectives from the Monitoring Strategy, UAVs can be deployed to monitor a variety of parameters (e.g. bare peat cover, vegetation change, erosion rates). This results from their capability to obtain high-resolution imagery (and other data) at high frequencies across a restoration footprint. In conjunction with walk-over surveys and satellite remote sensing products, UAVs can provide additional detail in remote areas with difficult terrain. This makes UAVs especially useful for planning restoration work and monitoring the effect of several restoration techniques. This section will highlight the advantages and limitations of common UAV-derived data that can be used within the wider monitoring purposes.

High-resolution imagery and digital surface models

High-resolution imagery and digital surface models (DSMs) are among the most commonly used UAV-derived data products for monitoring purposes. For peatland habitats, these datasets can help identify land cover types, estimate vegetation health or describe overall site condition, and aid mapping of hydrological and erosion features. Furthermore, a series of UAV flights across the same AOI can facilitate change-analysis.

This UAV guidance document only considers orthomosaics (i.e. aerial imagery, corrected for topography, created from a large number of overlapping images) and DSMs (i.e., digital surface model in raster format), either with or without additional ground control point (GCP) corrections. Since there is a myriad of UAV platform and camera configurations to consider, the guidance considers three levels of UAV orthomosaic and DSM quality (Table 4).

The orthomosaic and DSM quality levels are primarily linked to ground-sampling distance (GSD), i.e. the spatial resolution of the data, and are associated with various degrees of quality and application value for subsequent data analysis techniques (e.g. land cover classification). For Peatland ACTION work (i.e. feasibility studies, spatial data provided for funding applications and subsequent monitoring of restoration work), there are a number of minimal data quality requirements that need to be considered, unless agreed otherwise. Generally, Level 1 datasets can be used at all stages of a Peatland ACTION project, whereas for more detailed mapping and monitoring exercises, Level 2 would be preferred, without (Level 2a) or with (Level 2b) additional GCPs. For specific monitoring of, for example, erosion features, or for species-specific mapping, Level 3 data can be chosen. Analyses of change are possible for all levels, but can be limited in their accuracy and precision.

| Level | Requirements | | | Description | | |
|---|---|---|---|---|---|---|
| | Ground Sampling Distance (GSD) | Ground Control Points (GCPs) | Multiband | Application of orthomosaics and DSMs | Pros | Cons |
| 1 | > 5 cm | Optional | Recommended scale | General base map generation and elevation data, useful for basic mapping procedures and with multiband data, allowing for spectral vegetation indices calculations | Most likely scale for multiband (beyond RGB) data, largest area option, relatively easy to process | Loss of detail, without GCPs potential errors in georeferencing and DSM quality |
| 2a | 2 – 5 cm | Optional | Possible | Detailed base map and elevation data, useful as reference material and (automatic) feature mapping | Common range of UAV GSD, high detail to cost ratio | Increasing data storage and processing requirements, requires GCPs for change analyses purposes |
| 2b | 2 – 5 cm | Required | Possible | Same as level 2a, with additional analysis options due to increased spatial confidence | Same as level 2a, with increased spatial accuracy allowing for change analysis | Requires additional data quality assessment (i.e., GCP error), additional field preparations |
| 3 | < 2 cm | Required | Unlikely | Highly-detailed map and elevation data, valuable for comprehensive site evaluation and (automatic) feature extraction | Enables detailed feature mapping, species and feature-specific classification options | Requires accurate x,y and z GCP locations for volumetric analysis and large data storage and processing capabilities, unlikely range for multiband data |

All levels come with associated expenses, due to platform and sensor costs, as well as necessary processing and storage requirements. These are not considered for this guidance, but generally higher level datasets are linked to increased expense.

Photogrammetry tools and associated algorithms, such as Structure-from-Motion (SfM) workflows, require sufficient spatial overlap between images in order to identify matching features across the set of images of the scene. Depending on the image quality (i.e., bands, resolution, flight conditions), slight adjustments to the flight plan have to be made. For example, multiband sensors often yield lower resolution images (see Table 2), which require a lower flight altitude in order to obtain a meaningful ground-sampling distance (GSD) for the surveyed area. There are a number of online tools that can help calculate flight heights and GSD for a range of UAVs, such as the GSD calculator by Propeller Aero, but most flight planning software includes this feature.
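As a rough illustration of how flight height and GSD relate, the sketch below uses the commonly quoted relation for a camera pointing straight down: GSD = (sensor width × flight height) / (focal length × image width). The function names and the camera values in the usage note are illustrative assumptions and do not describe any particular sensor; always check the manufacturer's specifications and your flight planning software.

```python
def gsd_cm_per_pixel(sensor_width_mm, focal_length_mm, image_width_px, flight_height_m):
    """Approximate GSD (cm/pixel) for a nadir-pointing camera over flat ground."""
    # Width of the ground footprint covered by one image, in metres
    footprint_width_m = sensor_width_mm * flight_height_m / focal_length_mm
    # Convert to centimetres and divide by the number of pixels across the image
    return footprint_width_m * 100.0 / image_width_px


def flight_height_for_gsd(sensor_width_mm, focal_length_mm, image_width_px, target_gsd_cm):
    """Flight height (m above ground) needed to reach a target GSD (cm/pixel)."""
    return target_gsd_cm * focal_length_mm * image_width_px / (sensor_width_mm * 100.0)


def quality_level(gsd_cm):
    """Map a GSD to the levels of Table 4 (Level 2a or 2b depends on GCP use)."""
    if gsd_cm > 5.0:
        return "1"
    if gsd_cm >= 2.0:
        return "2"
    return "3"
```

For example, with hypothetical values of a 13.2 mm wide sensor, an 8.8 mm lens and 5472 pixel wide images, `gsd_cm_per_pixel(13.2, 8.8, 5472, 100)` gives roughly 2.74 cm per pixel, which falls in the Level 2 range. The relation ignores terrain relief and camera tilt, so treat the result as a planning estimate only.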

When deploying GCPs, you should obtain accurate coordinates (with an error similar to or smaller than the required GSD) for all markers put out in the field. We recommend using a minimum of four GCPs for areas up to a hectare, although requirements can vary depending on site area and the hardware and software used.
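One possible way to quantify GCP error is the horizontal root-mean-square error (RMSE) between the surveyed marker coordinates and the positions of the same markers read from the processed orthomosaic. The sketch below is a minimal example under that assumption; the function names are hypothetical and the comparison against the GSD simply mirrors the rule of thumb given above.

```python
import math


def horizontal_rmse(surveyed, measured):
    """Horizontal RMSE between two equally ordered lists of (x, y) coordinates.

    The result is in the units of the input coordinates (e.g. metres).
    """
    if not surveyed or len(surveyed) != len(measured):
        raise ValueError("Both lists must be non-empty and of equal length")
    squared_offsets = [
        (sx - mx) ** 2 + (sy - my) ** 2
        for (sx, sy), (mx, my) in zip(surveyed, measured)
    ]
    return math.sqrt(sum(squared_offsets) / len(squared_offsets))


def gcp_error_within_gsd(surveyed, measured, gsd_m):
    """True if the horizontal RMSE is no larger than the required GSD (same units)."""
    return horizontal_rmse(surveyed, measured) <= gsd_m
```

Both coordinate lists need to be in the same projected coordinate system, and the GSD has to be converted to the same unit before the comparison.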

Land cover and land use classification

There are many different techniques and outcomes available when trying to classify UAV-derived data. This guidance uses the land-use and land-cover (LULC) classification used for UAV-derived, airborne and satellite imagery data. Two important parts of the LULC classification have to be considered: the LULC classes to be classified and the classification technique. For UAV-derived imagery and digital surface models, both aspects are important, especially on peatland habitats due to their sometimes complex LULC.

LULC classes - Peatland

From a peatland restoration perspective, there are a handful of relevant land cover and land use classes preferred to classify from UAV-derived data including bare peat, Sphagnum mosses and water (see Table 5 for more examples).

| Class level | Cover type | Suggested colour | Description |
|---|---|---|---|
| Primary classes | Bare peat | Brown | Areas with no vegetation cover, including dry pool systems |
| Primary classes | Sphagnum mosses | Orange | Dominant Sphagnum cover (>70%) |
| Primary classes | Water | Blue | Pools, streams and inundated peat hollows |
| Secondary classes | Heather / shrubs | Purple | Ericoid shrub cover, may include herbaceous species too |
| Secondary classes | Sedges / grasses | Green | Graminoid cover, may include herbaceous species as well |
| Secondary classes | Trees | Red | Woodland or individual trees, native and/or non-native |
| Secondary classes | Mineral soil | White | Found on severely degraded peatlands, where the underlying substrate is exposed |

Classified products can be spatial rasters or a set of polygons (e.g. as part of a GeoPackage layer) for monitoring purposes – advice on this point can be given by the Peatland ACTION Data and Evidence Team.

Classification techniques

In order to increase confidence in, and the accuracy of, any classification technique, at least one ground truth dataset has to be collected to validate the UAV output (the number of points/polygons depends on the technique, cover types and area). Sampling strategies for these training and validation datasets will have to be discussed upon submission of a Peatland ACTION application as part of a feasibility study.
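To make the validation step more concrete, the sketch below compares ground truth labels with the classes predicted at the same locations and reports a confusion count, the overall accuracy and a per-class (producer's) accuracy. It is a minimal, assumed workflow in plain Python; the function names are hypothetical, and dedicated GIS or machine learning packages offer more complete accuracy assessments.

```python
from collections import Counter


def confusion_counts(reference, predicted):
    """Count (reference class, predicted class) pairs for validation points."""
    if not reference or len(reference) != len(predicted):
        raise ValueError("Both label lists must be non-empty and of equal length")
    return Counter(zip(reference, predicted))


def overall_accuracy(reference, predicted):
    """Share of validation points whose predicted class matches the ground truth."""
    counts = confusion_counts(reference, predicted)
    correct = sum(n for (ref, pred), n in counts.items() if ref == pred)
    return correct / len(reference)


def producers_accuracy(reference, predicted, cover_class):
    """Share of ground truth points of one class that were classified correctly."""
    counts = confusion_counts(reference, predicted)
    total = sum(n for (ref, _), n in counts.items() if ref == cover_class)
    if total == 0:
        return None  # class not present in the ground truth dataset
    return counts[(cover_class, cover_class)] / total
```

Keeping the validation points separate from the points used to train a classifier avoids overstating the reported accuracy.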

For the classification of input material we recommend supervised classification / machine learning algorithms (e.g. random forest, support vector machines or artificial neural networks). Other approaches such as cluster analyses (i.e. unsupervised classification) are less preferred due to their limited supervised input. Using these tools efficiently requires dedicated software and often specific hardware. It is recommended to obtain the required skillset and/or reach out to the Peatland ACTION Data and Evidence Team, or NatureScot GIG Earth Observation Team if necessary. Please also refer to software and/or package support guidance documentation for additional details, or have a look through the list of references.

For classifying UAV-derived data, most GIS and data analysis software packages can handle straightforward pixel-based approaches, whereas object-based segmentation requires additional input and, in some instances, proprietary software.

Object-based (segmentation): clusters pixels with similar characteristics into ‘objects’ that can be related to different classes in your AOI. Classification of segmented UAV imagery can have higher accuracy with fewer training points, although clustering of pixels can reduce detail.

Feature identification, classification and extraction

For some applications, a complete LULC classification is not required and other outputs are needed instead, such as (automatic) identification, classification and extraction of (individual) features. For Peatland ACTION applications or finalised projects, refer to the spatial data guidance for the most up-to-date list of identifiable features to ensure spatial data consistency across the project.

Peatland ACTION - feature identification

Although AOI, project aim and future data-use determine what features are to be identified and subsequently classified, the user needs to adhere to the standards described below.

Points: include single features identified in the UAV-derived data linked to for example, but not limited to, peatland restoration (e.g., dams), (monitoring) hardware (i.e., data-loggers, GCPs, posts) or vegetation or distinct topographic features (i.e., individual shrubs, points of minimum or maximum elevation, etc.);

Lines: where linear features can be mapped or extracted, lines can be used to identify features in the AOI, such as tracks, gullies, peat hags, streams, etc.

Polygons: individual areas can be outlined to highlight features in the AOI. A myriad of potential features are available, depending on the monitoring purpose. Polygons can include, for example, bare peat areas or pools, certain vegetation compositions, buildings or other infrastructure.

For every feature – point, line or polygon – a minimal set of detailed information is required for use. However, this depends on the proposed use of the UAV-derived data, and should be checked with the Peatland ACTION Data and Evidence Team for peatland(-restoration) related use cases, or the NatureScot GIG Earth Observation Team.

Mapping and extraction approaches

Identification (classification) and extraction of point, linear and (non-fully covering) polygon data can be achieved with automatic workflows, but often manual delineation is preferred. Manual delineation avoids the need for training datasets, which makes validation straightforward.

Most manual mapping can be achieved by using a georeferenced UAV-derived orthophoto as a base map in a GIS. However, a DSM can also aid in identifying features that are of interest. Especially on (degraded) peatland sites, topographic information can aid in mapping for feasibility studies, applications and final reports.

Automatic identification and extraction techniques on peatlands can include hydrological modelling to identify watersheds and map streams. On high-resolution DSMs (all levels) this can help identify areas prone to inundation after drain-blocking and potentially automatically map volumetric changes.

Change analyses

In order to perform a change analysis, at least two UAV surveys – producing orthomosaics and/or DSMs of the same dataset level – have to be completed covering the same spatial extent. For Peatland ACTION, common use cases for monitoring include:

Image-based change: manual checking of overlapping orthomosaics to identify areas and features of change, i.e., gully and ditch blocking, dams, vegetation recovery, etc. (all levels);

Index-based change: using multiband (vegetation) indices as proxies for change associated with restoration success, seasonality or biomass fluctuations and hydrological and/or peatland condition (available for levels 2b and 3);

Volumetric change: identification of depletion and accumulation of material across a target area by subtracting two DSMs from each other in a GIS. Depending on the vertical accuracy of the UAV-derived datasets, this analysis can, for example, yield erosion rates of peat hags on degraded peatlands, estimate biomass change post-restoration, or support peat-slide risk and/or impact assessments (available for levels 2b and 3).
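The DSM subtraction behind a volumetric change estimate can be sketched as follows. This is a simplified, assumed example that treats each DSM as a nested list of elevations on an identical grid; in practice the rasters would be read and aligned in a GIS or raster library first. The `min_change_m` threshold, used to ignore differences smaller than the expected vertical error, and the function name are illustrative choices rather than part of this guidance.

```python
def volumetric_change(dsm_before, dsm_after, cell_size_m, nodata=None, min_change_m=0.0):
    """Return (loss_m3, gain_m3) between two DSMs on the same grid.

    Each DSM is a list of rows of elevations in metres; cell_size_m is the
    pixel size (GSD) in metres.
    """
    if len(dsm_before) != len(dsm_after):
        raise ValueError("DSMs must have the same number of rows")
    cell_area_m2 = cell_size_m ** 2
    loss_m3 = 0.0
    gain_m3 = 0.0
    for row_before, row_after in zip(dsm_before, dsm_after):
        if len(row_before) != len(row_after):
            raise ValueError("DSMs must have the same number of columns")
        for z_before, z_after in zip(row_before, row_after):
            # Skip cells without valid elevation in either survey
            if nodata is not None and (z_before == nodata or z_after == nodata):
                continue
            dz = z_after - z_before
            # Ignore differences below the chosen detection threshold
            if abs(dz) < min_change_m:
                continue
            if dz < 0:
                loss_m3 += -dz * cell_area_m2   # surface lowered (depletion)
            else:
                gain_m3 += dz * cell_area_m2    # surface raised (accumulation)
    return loss_m3, gain_m3
```

Because a DSM includes vegetation, a change in surface height is not necessarily a change in peat volume, so results need interpreting alongside the orthomosaics.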

If the data level requirements are the same in subsequent surveys and comparable land cover classification steps have been executed, additional change analysis steps can be explored:

Actual classification-based change: automatic detection of changes in land cover classes, based on actual location of features to identify for example post-restoration vegetation establishment through recovery (available for levels 2b and 3);

Coverage classification-based change: estimation of land cover class changes in the AOI, i.e., percentage change or pixel- or area-based change. This does not technically require accurate overlap when comparing two surveys, but it does require comparable land cover types (all levels).
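As a rough illustration of coverage classification-based change, the sketch below computes the percentage cover of each class in two classified rasters and then the difference between them. It assumes each classified raster has been read into a nested list of class labels, with `None` for unclassified pixels. The class names and the function names `class_cover_percent` and `cover_change` are hypothetical. Because each survey is summarised independently, the two grids do not need to overlap pixel for pixel.

```python
from collections import Counter


def class_cover_percent(classified):
    """Percentage of classified pixels falling in each land cover class."""
    counts = Counter(
        label for row in classified for label in row if label is not None
    )
    total = sum(counts.values())
    if total == 0:
        raise ValueError("raster contains no classified pixels")
    return {label: 100.0 * n / total for label, n in counts.items()}


def cover_change(before, after):
    """Change in percentage cover per class (after minus before)."""
    pct_before = class_cover_percent(before)
    pct_after = class_cover_percent(after)
    labels = sorted(set(pct_before) | set(pct_after))
    return {
        label: pct_after.get(label, 0.0) - pct_before.get(label, 0.0)
        for label in labels
    }


# Illustrative classified grids (labels are made up).
survey_1 = [["bare_peat", "bare_peat"], ["vegetation", "water"]]
survey_2 = [["bare_peat", "vegetation"], ["vegetation", "water"]]
change = cover_change(survey_1, survey_2)
print(change)
```

Multiplying the pixel counts by the pixel area instead of normalising to percentages would give the area-based variant mentioned above.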

Products, metadata and storage

Currently we only collect and store UAV-derived products that fall within one of the categories listed per use case as shown in Table 6.

| Use case | Dataset | Format | Example |
|---|---|---|---|
| Promotional purposes | Image(s) | JPG, PNG, IMG | Single image or set for promotional use |
| | Video | MOV, MP4 | Footage |
| Monitoring purposes | Imagery | GeoTIFF, JPG, PNG, IMG | Single image or set for monitoring |
| | Orthophoto | GeoTIFF | Orthorectified (mosaic of) image(s) |
| | Digital surface model | GeoTIFF | Elevation model |
| | Processed raster | GeoTIFF | Classified orthophoto/DSM, drainage model, etc. |
| | Processed features | GPKG | Points, lines and/or polygons used for monitoring |

Products such as UAV-derived imagery, digital elevation models and/or processed datasets require specific (additional) metadata. This is to ensure proper storage and future use of the data within or outwith Peatland ACTION. Standard metadata elements (Table 7) are based on UK GEMINI standards (v2.3) as used in all of our projects.

| Requirement level | Element name | Description | Please complete for your data (suggestions have been provided for some fields) |
|---|---|---|---|
| Mandatory elements | Title | Name of dataset | - |
| | Dataset language | What language were the data recorded in? | English |
| | Abstract | Short description | - |
| | Topic | What is your data about? | - |
| | Keywords | Search terms | UAV, Peatland ACTION |
| | Temporal extent | Time range of your data | YYYY-MM-DD / YYYY-MM-DD |
| | Dataset reference date | Date data were submitted | YYYY-MM-DD |
| | Lineage | How did you produce the data? | e.g. site visit, UAV flight(s) with DJI Phantom 4, post-processing, number of bands |
| | Extent | Where did the data come from? | - |
| | Spatial reference system | Coordinate reference system used | British National Grid (EPSG: 27700) |
| | Data format | Type of data | GeoTIFF raster, geopackage, etc. |
| | Responsible organisation | Responsible for the establishment, management, maintenance and distribution of the data resource | NatureScot (Peatland ACTION) |
| | Limitations on public access | Is there any sensitivity around these data? | - |
| | Metadata date | When this metadata form was completed | - |
| | Metadata point of contact | Who can be contacted for information about this metadata | Your contact details |
| Additional recommended elements where applicable | Dataset history | Number of images in data, camera type, resolution | - |
| | Additional information | - | - |

For products obtained within the promotional purposes, only a title, abstract, topic, keywords and temporal extent are required.
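A quick completeness check before submission could look like the sketch below. The element names are copied from Table 7 and from the reduced promotional list above; everything else is an assumption made for illustration, including the function name `missing_elements`, the use of a plain dictionary to hold the metadata, and the placeholder values in the example record. It is not an official validation tool and does not check the content of any field.

```python
# Element names follow Table 7; the structure and names below are illustrative.
MANDATORY_MONITORING = (
    "Title", "Dataset language", "Abstract", "Topic", "Keywords",
    "Temporal extent", "Dataset reference date", "Lineage", "Extent",
    "Spatial reference system", "Data format", "Responsible organisation",
    "Limitations on public access", "Metadata date",
    "Metadata point of contact",
)
MANDATORY_PROMOTIONAL = (
    "Title", "Abstract", "Topic", "Keywords", "Temporal extent",
)


def missing_elements(metadata, use_case):
    """List required elements that are absent or empty for a use case."""
    required = {
        "monitoring": MANDATORY_MONITORING,
        "promotional": MANDATORY_PROMOTIONAL,
    }
    if use_case not in required:
        raise ValueError("use_case must be 'monitoring' or 'promotional'")
    return [
        name for name in required[use_case]
        if not str(metadata.get(name) or "").strip()
    ]


# Placeholder record: values are examples only.
record = {
    "Title": "Example site orthophoto",
    "Abstract": "Orthophoto of an example restoration site",
    "Topic": "Peatland restoration",
    "Keywords": "UAV, Peatland ACTION",
    "Temporal extent": "2022-06-01 / 2022-06-01",
}
print(missing_elements(record, "promotional"))
print(missing_elements(record, "monitoring"))
```

The same record passes the promotional check but still lacks ten of the mandatory elements for monitoring products, which reflects the difference between the two requirement sets.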

For storage requirements, ensure your project details for the use case are defined as outlined in the introduction flow chart. Data are typically submitted in a compressed .zip folder or to a secure Galaxkey repository via a provided link.

Licensing and sharing

All UAV data obtained for Peatland ACTION or NatureScot promotional or monitoring purposes are expected to be made available under an Open Government Licence (OGL).

For more details on data licence and sharing capabilities, please contact the Peatland ACTION Data Team or the NatureScot GIG Earth Observation team.

Glossary

Area of interest (AOI)

Target area identified for a (UAV-) survey.

Digital surface model (DSM)

A spatial model that only contains elevation data of an area. When non-topographic features are included in the model (i.e., vegetation or tall infrastructure), it is often described as a surface model.

Gimbal

Pivoting support that allows rotation of an attached object (i.e., UAV-camera) along one or more axes. On UAVs, these gimbals can help increase camera stability and allow for different image capture angles.

Global navigation satellite system/Global positioning system (GNSS/GPS)

GNSS is an umbrella term for all global satellite positioning systems, of which GPS is one, and covers the use of satellites to determine a location on the earth’s surface. Commonly, UAVs are fitted with GPS functionality or can estimate a more accurate location from auxiliary positioning platforms, such as an RTK (real-time kinematic) base station.

Ground control point (GCP)

Points on the surface – within your area of interest (AOI) – of known location used to geo-reference spatial data (i.e., UAV-derived imagery and elevation data). In practice these can be reflective markers in the field or QR targets for automatic identification in post-processing steps. Typically GCP information includes latitude, longitude and elevation data obtained using accurate GNSS/GPS.

Ground-sampling distance (GSD)

Often referred to as the ‘data resolution’ of imagery, meaning the real-world size of a single pixel on the ground.

LiDAR

Stands for Light Detection and Ranging and describes the technique of using light pulses to estimate distance between the sensor (i.e., a UAV-LiDAR sensor) and the target (e.g., peatland surface and vegetation) through the means of reflection. Metrics and characteristics associated with the reflected light pulses can provide information not only on elevation, but also on target type (i.e., vegetation, water or hard surfaces).

Orthomosaic

Product of aerial images that are stitched together using photogrammetry software, which are orthorectified to produce a correct image of the surveyed scene by removing any lens or camera position (tilt) effects from the images.

Photogrammetry

Describes a technique – or actually science – to obtain three-dimensional measurements from photographs. Typically, this process involves obtaining overlapping images covering the area of interest (AOI) from which an orthomosaic can be derived. Also described as Structure from Motion (SfM).

Multiband

Refers to the selection of spectral regions available on a camera sensor. Often related to sensors capable of capturing wavelengths beyond the red-green-blue spectrum.

Near-infrared (NIR)

Spectral region ranging from 0.75 to 3.00 µm.

Restoration footprint

Area associated with restoration impact on a site.

Structure-from-Motion (SfM)

A photogrammetry technique to derive three-dimensional information from overlapping imagery.

Supervised classification

User-defined classification of a dataset. In this guidance, we refer to spatial classification (i.e., surface mapping) of peatland areas. This means that the user has to provide a training dataset to the classification algorithm (not further covered in the guidance) in order to classify the input data (e.g., UAV-imagery). The user determines what ‘classes’ are available to the classifier (i.e., bare peat, water, different vegetation groups) and will only be able to ‘map’ these from the input data.

Storyboard

Broad outline (in text) of a UAV flight which details the area of interest (AOI) and expected scenes to be covered in – usually only – promotional purposes. Information on the added value of to-be obtained images and footage should also be included for each flight.

Training dataset

A dataset containing information to train a (classification) model. In the case of UAV imagery, a training dataset can provide the associated red, green and blue (and/or more bands) values for different peatland cover types. (Micro-)topographic information can also be included to increase classification accuracy when certain cover types are linked to specific settings on the surface.

UAV (unoccupied aerial vehicle)

Commonly known as a drone, a UAV is an aircraft that is piloted by a ground-based controller. A wide range of UAVs exists, including rotorcraft and fixed-wing drones.

Unsupervised classification

A classification method that does not require any prior input data from the user (see Supervised classification), which can yield valuable results when no training data is available. Unsupervised classification algorithms (not further covered in the guidance) can approach a ‘mapping’ exercise in different ways. This can range from relatively simple clustering techniques to artificial neural network analyses.

Validation dataset

In order to determine the accuracy of a (supervised) classification model, the user should compare the output with a (predefined) validation dataset. This dataset typically is a subset of the training dataset that was used for teaching the model in the first place. Depending on the type of accuracy being determined, this validation dataset can either be derived prior to the classification, or can be part of the entire training dataset as well (i.e., sampled after the classification).
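To make the role of a validation dataset concrete, the sketch below compares classifier output against reference labels at the same sample locations and reports overall accuracy together with a simple confusion count. It is a minimal example under stated assumptions: the class labels are made up, the function names `overall_accuracy` and `confusion_counts` are hypothetical, and overall accuracy is only one of several accuracy measures that could be reported.

```python
from collections import Counter


def confusion_counts(reference, predicted):
    """Count (reference, predicted) label pairs across validation samples."""
    if len(reference) != len(predicted):
        raise ValueError("reference and predicted must have the same length")
    return Counter(zip(reference, predicted))


def overall_accuracy(reference, predicted):
    """Fraction of validation samples whose predicted class is correct."""
    if len(reference) != len(predicted):
        raise ValueError("reference and predicted must have the same length")
    if not reference:
        raise ValueError("validation dataset is empty")
    correct = sum(r == p for r, p in zip(reference, predicted))
    return correct / len(reference)


# Illustrative labels at five validation points (made up).
reference = ["bare_peat", "bare_peat", "water", "vegetation", "vegetation"]
predicted = ["bare_peat", "vegetation", "water", "vegetation", "vegetation"]

accuracy = overall_accuracy(reference, predicted)
confusion = confusion_counts(reference, predicted)
print(f"overall accuracy: {accuracy:.2f}")
print(confusion[("bare_peat", "vegetation")], "bare peat sample(s) mapped as vegetation")
```

The confusion counts show which classes are being mixed up, which a single accuracy figure cannot reveal.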

Useful references

This is not a full list of useful references, and users are free to refer to different literature or online courses. Peatland ACTION and NatureScot do not endorse, sponsor or recommend any of the resources listed below; the main aim of the list is to provide some examples to the users of this guidance document.

Peatland UAV use reviews

Dronova, I., Kislik, C., Dinh, Z., & Kelly, M. (2021). A review of unoccupied aerial vehicle use in wetland applications: Emerging opportunities in approach, technology, and data. Drones, 5(2), 45.

Jeziorska, J. (2019). UAS for wetland mapping and hydrological modelling. Remote Sensing, 11(17), 1997.

Habitat Restoration in England’s Hills and Mountains: A Novel Application of Unmanned Aerial Vehicle (UAV) Technology (2017).

Multisensor/spectral applications

Beyer, F., Jurasinski, G., Couwenberg, J., & Grenzdörffer, G. (2019). Multisensor data to derive peatland vegetation communities using a fixed-wing unmanned aerial vehicle. International Journal of Remote Sensing, 40(24), 9103-9125.

Lendzioch, T., Langhammer, J., Vlček, L., & Minařík, R. (2021). Mapping the groundwater level and soil moisture of a montane peat bog using UAV monitoring and machine learning. Remote Sensing, 13(5), 907.

Räsänen, A., Aurela, M., Juutinen, S., Kumpula, T., Lohila, A., Penttilä, T., & Virtanen, T. (2020). Detecting northern peatland vegetation patterns at ultra‐high spatial resolution. Remote Sensing in Ecology and Conservation, 6(4), 457-471.

Räsänen, A., Juutinen, S., Kalacska, M., Aurela, M., Heikkinen, P., Mäenpää, K., ... & Virtanen, T. (2020). Peatland leaf-area index and biomass estimation with ultra-high resolution remote sensing. GIScience & Remote Sensing, 57(7), 943-964.

UAV-LiDAR/Photogrammetry

Lovitt, J., Rahman, M. M., & McDermid, G. J. (2017). Assessing the value of UAV photogrammetry for characterizing terrain in complex peatlands. Remote Sensing, 9(7), 715.

Kalacska, M., Arroyo-Mora, J. P., & Lucanus, O. (2021). Comparing UAS lidar and structure-from-motion photogrammetry for peatland mapping and Virtual Reality (VR) visualization. Drones, 5(2), 36.

Mark Brown. Pennine PeatlandLIFE. UAV Applications for blanket bog restoration and monitoring (2020). Presentation